Yay! A related topic to the previous post! Today, Kruskal's Algorithm for finding Minimum Spanning Trees from weighted, undirected graphs.

Prerequisites

For this topic to make sense, you should be aware of a few things. If I have covered them on this blog, you can check that up, otherwise Google them before continuing! You have been warned.

- Basics of Graphs: The absolute essentials of graph theory are necessary. Basically, we're gonna be working with undirected, weighted, connected graphs. A graph is a data structure consisting of elements called vertices or nodes and connecting elements called edges.

- Minimum Spanning Trees: Kruskal's algorithm is used to construct minimum spanning trees from graphs. Read it here.

- Basics of Set Theory: Okay, this is elementary math. Please tell me you know set theory. Just the definitions, unions, etc. I am not really gonna cover any bit of set theory in this blog. Go open your 8th grade math textbook.

- Any ONE Sorting Technique: Anything. Quicksort. Merge Sort. Insertion Sort. Heck, even bubble sort. Anything at all goes.

If you have cleared the above prerequisites, you are good to go.

Overview

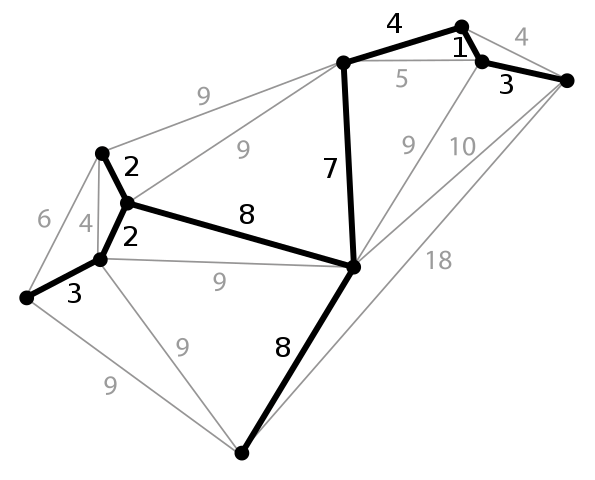

Kruskal's algorithm works with edges as the emphasis of the graph. The basic idea is quite simple and easy to grasp. Note that the "tree", at the beginning, is empty.

- Take a list of all the edges

- Sort them according to weights

- Taking the next minimum edge, add it to the tree if it does NOT form a cycle with the existing tree.

That's it. This process is repeated for all edges. We can quite plainly see that this will generate a minimum spanning tree, as all edges (and hence all vertices) are covered, and also we make sure we pick the minimum possible edge without forming a cycle every time.

Sounds good? Great. Now tell me, how the heck are you going to check for the existence of a cycle? Remember that the loop step is not really as simple as it sounds: you have to make sure you do not form any cycles.

For example, consider the following simple graph.

Now in the case of the above graph, let us assume weights are in the order a<b<c<d. Hence, according to the logic followed by Kruskal's, a is added first, followed by b, and then c. After this, however, there is a problem. The edge d has two end nodes (remember that an edge only contains three pieces of information: the two end nodes and the weight of itself). These two end nodes are such that one is connected to a and the other is connected to b. Now adding of d is NOT feasible as it will form a cycle: in other words, the two nodes at its ends are already connected by a path: namely a-c-b. The question here is: how do we detect this pre-existing path in the tree?

Enter the Union-Find Algorithm.

The Union-Find Algorithm

What? What the hell? Another algorithm? Weren't we just doing Kruskal's?

Well, yes, yes and yes. The Union-Find Algorithm is essential to the operation of Kruskal's. You'll see why: first we need to forget all about the main Kruskal's operation and focus exclusively on this.

The basis of this start from Set Theory. Yes, that seemingly easy and unimportant section of math (which incidentally has everything to do with the foundations upon which Mathematics itself is built) plays a critical role in the finding of MSTs. The reason for this is simple... in an undirected graph, the whole basis of connectedness is that there is no order, just like sets.

Confused? Okay, let's clear this out. Suppose that whenever two nodes were made connected in the tree, I put them in the same set (initially, assume each node is in its own set with only one element). Now if I take two nodes and try to connect them, there are two possibilites:

- The two nodes are in two different sets, which means on connecting them we can merge the two sets they are in, to form one set.

- The two nodes are in the SAME set, which means they are already connected by some path.

In Case 1, the two nodes are previously unconnected, so that's fine: we can connect them. But in Case 2, if you try to join them, we see that they are already in the same set, which means they are already (indirectly) connected. BOOM! Cycle! Avoid!

Notice that the fun comes from the fact that sets enforce no order. Therefore we can state that if two elements are in the same set, they are somehow connected. How they are connected is of no concern to us. What matters is that they are already connected and it will not help us by adding another edge, as that will form a cycle.

From the above, we can elaborate a bit more on Kruskal's basic algorithm (Last bit of Kruskal's in this subsection, I promise):

- Take all edges in an array

- Sort that array (in an ascending order)

- Take the next (minimum edge), examine its end nodes:

- If they belong to different sets, merge their sets and add that edge to the tree

- Otherwise skip the edge

- Print the tree obtained.

Our real problem has now been dumbed down considerably. All we have to do is to figure out a method to check which set an element belongs to, and then another method to merge two sets. Here is where the Union-Find Algorithm truly steps in (Haha, I know I gave quite a buildup earlier, but seriously, it does step in here).

The Union-Find Algorithm (for realsies)

The Union-Find algorithm consists of two methods: Union and Find (well, duh). The two methods are used for two different actions: one for merging two sets using the Union operation, and the other to find which set an element belongs to.

Let us discuss the Find operation first. How do we find which set an element lies in? To find this, first we need to address another question: how are we going to store a set in a computer algorithm? Sets are not exactly straightforward to store in a system: a set implies no order between its elements. Also, how are we going to represent a set? For this, let us see how we represent sets in Math.

A set is usually represented by a letter placeholder. In other words, we define sets as, say set P = {1, 2, 3} is a set of 3 elements. In this sense, sets are akin to variables.

However, for our purposes, we know that any one element can only be in one set at a time. This is because our set definition puts all connected elements in the same set. If an element (vertice) x is a part of two different sets A and B, then this means that x is connected to all elements in A as well as all elements in B. This further means that all elements in A are connected to all elements in B (via x). Hence, the two sets A and B are actually the same set, and not two different sets (This, in Mathematics, is called a proof by contradiction, by the way.).

Now as we have quite firmly established that any element can only belong to one set at a time (for the purposes of the Kruskal's algorithm), we can define the set a bit differently. For example, we can call the set P above as "The set containing the element 1", or, "The set containing the element 2", or, "The set containing the element 3"; regardless, we know that any one of these three definitions can only lead to a single, unique set (as every element is in only one set at a time). This solves the problem of defining an arbitrary number of variables to denote a number of sets (We do not know how many sets may be present at any particular step during the program: only that there are n sets in the beginning and 1 set at the end, where n is the number of nodes in the graph).

However, we are faced with a new problem. The set P above can be instead represented by one of its elements. The question is: which element? We now have three different definitions for the same set, because it contains three elements. To solve this, we simply do what the Mafia does: assign rank to elements within a set.

Element Ranking and FIND

What does the Mafia have to do with this, you ask? This is my analogy for a set representation. Consider a gang with x members. Out of the x members, the member who represents the entire gang is the one with the highest rank. The gang may have a hierarchy, with one member being the "rep" (representative: or boss, or superior, or senior, or whatever) of another. This hierarchy extends all the way to the "boss rep", who is identified by two characteristics: he has the highest rank in the gang, and he is his own "rep". In other words, the gang may be represented like this:

In the image, the arrows represent the link upwards in the hierarchy. Members on higher levels have higher ranks than elements on lower levels. Remember that the member at the top is his own representative, it does not mean that he has no rep.

We can store elements in the set similarly, with a rank defined for each element and a "rep" value. As there are a maximum of n elements (where n is the number of vertices in the graph), all we need is an array of n elements, where each element stores the node data, the "rep" index, and the rank.

In this sense, the representative element of the entire set is the element whose "rep" is itself. Thus, we have the first Find algorithm.

Find (x) :

t := x

while (t.rep is not t):

t = t.rep

return t

Now, consider THIS set:

If we wish to find the gang boss of the above set, starting from the lowest member, you'd have to traverse up a lot of levels. Remember, however, that in our set, order does not really matter. All we need is the main "boss rep", while all levels under it do not really matter in hierarchy. Think of it as a very loosely-organized gang: different people report to different people, but the important thing is that the message finally arrives at the boss.

Now in such a situation, every time a call is made to retrieve the boss of a set via another element, we can reorganize the set to make the element (and all its superiors) report directly to the boss, so that the call requires only a single upward reference to be made the next time it is called on them.

To elucidate, consider the above image. In the call to find the boss, if we reorganize the gang such that it now looks like this:

Any subsequent call on a lower member requires only constant time (1 step) to find the set's boss. The process has been made much faster on subsequent calls on the same elements, as there is now no requirement to go through unnecessary steps through middle-ranking members.

Hence we arrive at the second, faster version of the Find algorithm, the Find Algorithm: Mark 2.

Find (x) :

# x is the element whose set (boss) we have to find

if (x is not x.rep):

x.rep = Find(x.rep) # the recursive call finds the main boss and sets it as the rep of the current element: reorganization

return x.rep # x's rep is now the main boss: return it

The recursive step in the middle is called the path-compression step. We compress the path recursively for x and all its superiors all the way up to the top and set their representatives as the main boss. When the main boss is encountered, it is equal to its rep, so it returns itself.

The above is the final version of the Find Algorithm.

Gang Fights and UNION

Let's extend the Mafia analogy a bit further: what happens when we force two gangs to get connected? A gang fight, that's what. Except...

In our gang-world, we assume that two gangs fighting is basically a rank-comparison challenge. The one with the higher rank wins. Now this makes sense... except, whose rank do we compare? Here is where our gang-world differs from real life: the whole gang is not involved in the dispute - only the leaders. We basically find the gang bosses of both gangs, compare their ranks, and make the new overall gang boss the one with the higher rank.

This is cool and everything, but what if their ranks are equal? Well, then we introduce some "bias": say, we always pick the first gang to win whenever the ranks are equal for both gang-bosses. However, there is a slight dilemma. The new overall gang-boss (the boss of the first gang) should possess a rank greater than anyone else. So, we simply increase his rank by one, so that his new rank is now greater than his old rival's.

You can see how this gang-merging is similar to the Union procedure in sets. The elements belonging to two disjoint sets are now part of the same set, with the representative element of the new combined set made to the representative element of one of the older sets. In pictures, the Union / Gang-merging procedure is as follows:

Note that the gang bosses don't really have any special properties allotted to them. They are shown in a different style for the purpose of representation.

Therefore, the Union of two different sets with bosses aBoss and bBoss respectively is as follows:

Union (aBoss, bBoss) :

# inputs: aBoss and bBoss are two elements of DIFFERENT sets

if aBoss.rank > bBoss.rank :

bBoss.rep = aBoss

return

else if aBoss.rank < bBoss.rank :

aBoss.rep = bBoss

return

else :

# ranks are equal

bBoss.rep = aBoss

aBoss.rank += 1 # increase rank of aBoss

return

And now, we have completed the Union-Find Algorithm.

I'm baaack! ~ Kruskal's Algorithm

If you think back to when we began, we had ended up with this series of steps for Kruskal's algorithm:

- Take all edges in an array

- Sort that array (in an ascending order)

- Take the next (minimum edge), examine its end nodes:

- If they belong to different sets, merge their sets and add that edge to the tree

- Otherwise skip the edge

- Print the tree obtained.

Following that exact series of steps, we can actually proceed to write Kruskal's algorithm directly. Well, almost.

Almost? Aren't we done? Well, not entirely enough. Look at step 3.1 above, for instance. First, we have to check if the two elements belong to different sets. How do we do that? Well, we've already done that. Remember that a set is defined by the "boss rep" element in it. Therefore, if two elements have the same boss rep, they belong to the same set.

Yeah, now we're done. Proceeding with the algorithm!

Kruskal (A, n) :

# A is the array containing all edges in the graph

# n is the no of nodes (vertices)

T := new tree # declare empty tree

graphNodes := new Array (n)

for (i in [0..n-1]):

graphNodes[i].rank = 0

graphNodes[i].rep = graphNodes[i]

qsort(A) # in-place algorithm to sort array A by weight

for edge in A:

a := edge.end1 # one endpoint node index

b := edge.end2 # other endpoint node index

aBoss := Find (graphNodes[a])

bBoss := Find (graphNodes[b])

if aBoss == bBoss :

# bosses are equal, so same set

break # skip the edge

# aBoss and bBoss are different

T.addEdge (edge)

Union (aBoss, bBoss) # join the sets

T.printAll()

Note that the tree T can be something as simple as an array of edges with weights and end nodes recorded. The structure of the tree is left up to the implementation and use.

Time Complexity

The time taken by the above algorithm can be calculated as follows:

- Line 5:

O(1)

- Line 6 - 10:

O(V), where V is the number of nodes

- Line 12:

O(E log(E)), where E is the number of edges (this is true on using Quicksort. Technically this value depends on your sorting technique. Now would be a good time to stop using bubblesort).

- Line 14 - 27:

O(E log(V)) (Don't ask me how this works out. The discussion of complexities of the Find algorithm is pretty... complex. There's an entire chapter dedicated to this in CLRS. Someday, when I figure this out, I'll update this post.)

Summing it all up, we have a total complexity of O(E log(V)).

Kruskal's algorithm works better for sparser graphs, as the operation runs based on number of edges in the graphs. For denser graphs, it would be better to use another algorithm, namely Prim's algorithm. But that discussion, another time.

Written with StackEdit.